On the evenings of October 30 and November 1, the School of Computer Science (SCS) successfully held the 2nd @ World "Touching Academic Frontiers" international exchange event of the 2023 autumn semester. The event featured four outstanding SCS students: Zhang Ao, Zhang Yongmao, Song Kun, and Wang Qing. They held in-depth discussions with the students in attendance about their respective research directions and shared their latest research results and conference experiences. The event gave students a more focused and specialized platform for academic exchange and added variety to the @ World "Touching Academic Frontiers" series.

Zhang Ao shared his experience of attending ICASSP and briefly introduced his team's paper on audio-visual keyword spotting. In his report, he described in detail how keyword spotting (KWS) systems that rely on the audio modality alone suffer performance degradation in far-field and noisy conditions. To address this issue, the team proposed VE-KWS, an end-to-end keyword spotting method that uses visual information to enhance performance. The method improves keyword spotting accuracy by combining visual features with audio features.

In his report, Zhang Yongmao shared his experience of attending Interspeech and introduced his team's work on end-to-end singing voice synthesis. The report covered the end-to-end singing voice synthesis (SVS) model VISinger and its improved version, VISinger 2. The original VISinger has fewer parameters than traditional two-stage singing voice synthesis systems, but it suffers from several problems, including text-to-phase mapping, spectral discontinuities, and a low sampling rate. To address these issues, Zhang and his team combined digital signal processing (DSP) methods with VISinger and proposed VISinger 2. The new model can synthesize singing voice at 44.1 kHz, with richer expressiveness and better audio quality.

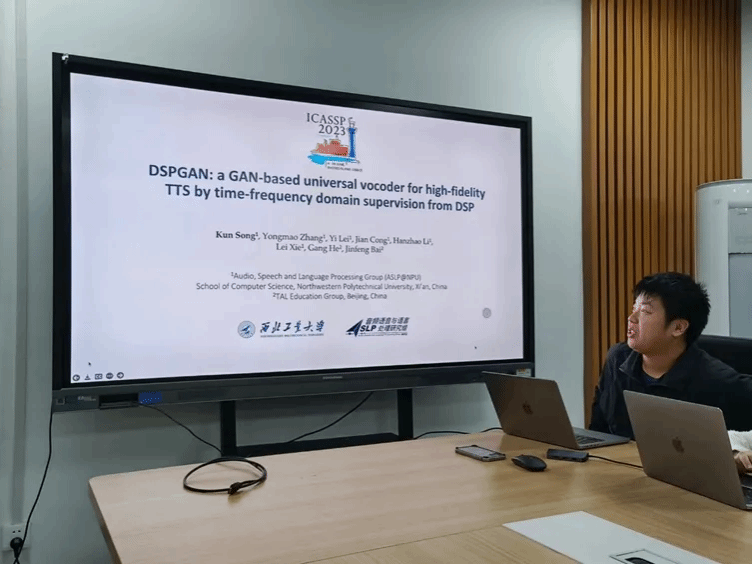

Song Kun shared his experience of attending the ICASSP conference and introduced his team's paper on robust neural vocoders. In his report, he presented the team's research result, DSPGAN, a vocoder that combines digital signal processing with generative adversarial networks (GANs). The technique has shown great potential in speech synthesis, especially through its supervision in the time and frequency domains. He explained in detail how combining GANs with DSP improves the quality of the speech synthesized by the vocoder and makes it higher in fidelity. In particular, he emphasized that many existing GAN-based vocoders tend to produce noisy, discontinuous, or weak-sounding audio when synthesizing speech, and that DSPGAN performs notably better on these problems.

Wang Qing presented a timbre-preserved black-box adversarial attack method for speaker recognition based on a pseudo-Siamese network. In their paper, Wang and her team proposed an adversarial attack method for speaker recognition that not only exploits the weaknesses of the voiceprint model but also preserves the target speaker's timbre under black-box attack conditions. To this end, she added adversarial constraints during the training of the voice conversion (VC) model so that it generates fake audio with the timbre preserved. A pseudo-Siamese network architecture was then used to learn from the black-box speaker recognition model while constraining both intrinsic similarity and structural similarity. The method achieved fairly good results.

The success of the @ World "Touching Academic Frontiers" international exchange event showcased the achievements of outstanding SCS students at international conferences. In the future, the SCS will continue to organize international exchange activities of this kind, giving teachers and students opportunities and platforms to follow international academic trends and broaden their research perspectives, and providing new impetus for innovation in students' academic research.